Combating Hate Speech with OpenAI-Powered Filters

"Combating Hate Speech with OpenAI-Powered Filters" summarizes OpenAI's efforts to use artificial intelligence to detect and prevent hate speech on online platforms. These filters are designed to automatically identify and remove offensive and harmful content, promoting a safer and more inclusive online environment.

Hate speech has become a pervasive issue in today's digital age, with the internet providing a platform for its dissemination. The consequences of hate speech can be severe, leading to harassment, discrimination, and even violence. To combat this problem, technology companies and researchers have been developing AI-powered filters that can automatically detect and prevent hate speech in online content.

OpenAI's models, including GPT-3, offer powerful tools for building these filters. In this article, we explore how to combat hate speech using OpenAI-powered filters and provide code snippets to get you started.

Understanding Hate Speech

Hate speech refers to any form of communication, whether spoken, written, or symbolic, that discriminates, vilifies, or incites violence or prejudice against individuals or groups based on attributes such as race, ethnicity, religion, gender, sexual orientation, or disability.

Detecting hate speech manually is a daunting task due to the sheer volume of content posted online. AI-powered filters offer an efficient and scalable solution to this problem.

Using OpenAI's GPT-3 for Hate Speech Detection

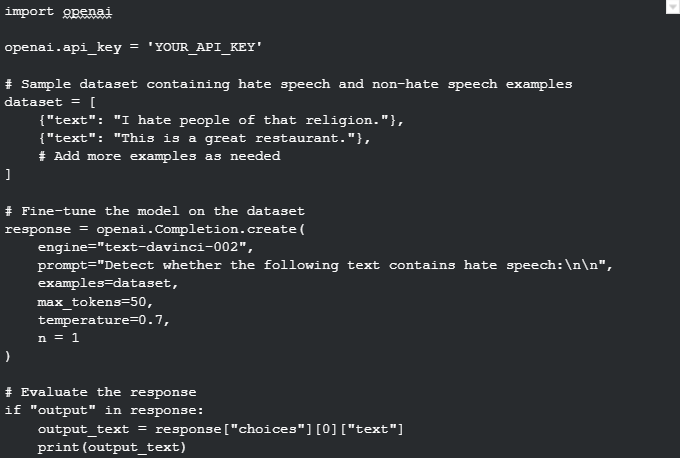

OpenAI's GPT-3 is a state-of-the-art language model capable of understanding and generating human-like text. To use GPT-3 for hate speech detection, we need to fine-tune it on a dataset containing examples of hate speech and non-hate speech. Below are code snippets to help you get started:

Installing OpenAI's Python Package

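OpenAI's Python package is published on PyPI as `openai` and can be installed with pip by running `pip install openai`.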

Fine-Tuning GPT-3 for Hate Speech Detection

Fine-tuning GPT-3 for this task requires a labeled dataset containing both hate speech and non-hate speech examples. Once fine-tuned, the model can be used to classify new text as hate speech or not.
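As a starting point, here is a minimal sketch of the data-preparation step, assuming the JSONL prompt/completion layout used by OpenAI's legacy GPT-3 fine-tuning. The separator, the label strings, and the function names are illustrative assumptions rather than anything prescribed by OpenAI, and the current OpenAI documentation should be checked for the supported models and file format before uploading a dataset.

```python
import json

# Assumed marker for the end of each prompt; any fixed string that does not
# occur in the texts themselves would serve the same purpose.
PROMPT_SEPARATOR = "\n\n###\n\n"

# Hypothetical label strings; the leading space follows the legacy
# prompt/completion convention for completions.
LABEL_HATE = " hate"
LABEL_NOT_HATE = " not_hate"


def to_finetune_record(text, is_hate_speech):
    """Turn one labeled example into a prompt/completion record."""
    label = LABEL_HATE if is_hate_speech else LABEL_NOT_HATE
    return {"prompt": text.strip() + PROMPT_SEPARATOR, "completion": label}


def build_jsonl(examples):
    """Serialize (text, is_hate_speech) pairs as JSONL, one record per line."""
    lines = [
        json.dumps(to_finetune_record(text, is_hate), ensure_ascii=False)
        for text, is_hate in examples
    ]
    return "\n".join(lines) + "\n" if lines else ""


if __name__ == "__main__":
    # Placeholder texts stand in for a real, carefully reviewed dataset.
    labeled_examples = [
        ("<text that annotators labeled as hate speech>", True),
        ("Thanks for sharing, this was a helpful thread.", False),
    ]
    with open("hate_speech_train.jsonl", "w", encoding="utf-8") as f:
        f.write(build_jsonl(labeled_examples))
```

The resulting file is what would be uploaded to start a fine-tuning job. At classification time the same separator would need to be appended to each input so that prompts match the training format.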

Challenges and Considerations

While OpenAI's GPT-3 can be a valuable tool in combating hate speech, there are several challenges and considerations to keep in mind:

1. Data Bias

Models like GPT-3 can inherit biases present in the training data. Careful preprocessing and post-processing are essential to minimize biased outputs.

2. Ethical Concerns

Decisions about what constitutes hate speech can be subjective. Striking the right balance between free speech and preventing harm is a complex ethical challenge.

3. False Positives and Negatives

No model is perfect, and there will be instances of false positives (flagging non-hate speech as hate speech) and false negatives (missing hate speech). Continuous evaluation and improvement are crucial.

4. Scalability

As online content is continuously generated, hate speech detection systems must be scalable to handle large volumes of data in real time.
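The false positives and false negatives described in item 3 can be tracked with standard classification metrics. The following is a minimal sketch, assuming a held-out set of human labels and the filter's predictions are available as parallel lists of booleans (`True` meaning hate speech); the function names are hypothetical.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for a binary filter."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred and truth:
            tp += 1  # hate speech correctly flagged
        elif pred and not truth:
            fp += 1  # benign text wrongly flagged
        elif truth:
            fn += 1  # hate speech that was missed
        else:
            tn += 1  # benign text correctly passed
    return {"tp": tp, "fp": fp, "tn": tn, "fn": fn}


def filter_metrics(y_true, y_pred):
    """Compute precision, recall, and false positive rate from the counts."""
    c = confusion_counts(y_true, y_pred)
    flagged = c["tp"] + c["fp"]
    actual_hate = c["tp"] + c["fn"]
    actual_benign = c["fp"] + c["tn"]
    return {
        # Share of flagged items that really were hate speech.
        "precision": c["tp"] / flagged if flagged else 0.0,
        # Share of actual hate speech that the filter caught.
        "recall": c["tp"] / actual_hate if actual_hate else 0.0,
        # Share of benign items that were wrongly flagged.
        "false_positive_rate": c["fp"] / actual_benign if actual_benign else 0.0,
    }


if __name__ == "__main__":
    human_labels = [True, True, False, False, True]
    predictions = [True, False, True, False, True]
    print(filter_metrics(human_labels, predictions))
```

Recomputing these figures on freshly labeled samples at regular intervals is one way to carry out the continuous evaluation mentioned above. Low precision means benign posts are being removed, while low recall means hate speech is slipping through.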

Conclusion

OpenAI's AI-powered models, such as GPT-3, offer a powerful solution to combat hate speech by automating the detection process. By fine-tuning these models on labeled datasets, developers can create effective hate speech filters that can be integrated into online platforms to promote a safer and more inclusive digital environment.

However, it's essential to approach the deployment of such filters with careful consideration of ethical concerns and an ongoing commitment to reducing biases and improving accuracy.
